This article is part of our CS101 series, created to help early-stage developers and students build strong mental models for the tech they’re learning. In this post, we turn our focus to Deep Learning—specifically the new generation of tools and frameworks that help you not just train models, but understand them.

Have You Heard of Software 2.0?

Before we dive into the treasure trove of tools and tips we’ve gathered for you today, a quick pulse check—have you heard of the term Software 2.0? Coined by Andrej Karpathy in 2017, it describes a fundamental shift: instead of explicitly programming behavior line-by-line (Software 1.0), we now train neural networks to learn that behavior from data. Instead of code, we write datasets. Instead of logic trees, we design architectures and loss functions. And instead of debugging conditionals, we tune hyperparameters and examine gradients. Karpathy argued that neural networks aren’t just tools—they are a new way of programming. And in many domains, they’re winning.
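To make the contrast concrete, here is a minimal sketch in pure Python. It is our own toy illustration, not something from Karpathy's essay: the Celsius-to-Fahrenheit task, the variable names, and the learning rate are all assumptions chosen for readability. The first function is Software 1.0 (we write the rule), and the loop below it is a tiny Software 2.0 program (we supply examples and let gradient descent recover the rule).

```python
# Software 1.0: a human writes the rule explicitly.
def to_fahrenheit_v1(celsius: float) -> float:
    return celsius * 1.8 + 32.0


# Software 2.0: we only provide examples and let gradient descent find the rule.
celsius = [-20.0, -10.0, 0.0, 10.0, 20.0, 30.0, 40.0]
# Stand-in for collected measurements (assumption: noise-free data).
fahrenheit = [to_fahrenheit_v1(c) for c in celsius]

w, b = 0.0, 0.0          # the "program" is just these two learned numbers
learning_rate = 0.001    # a hyperparameter we tune instead of editing logic
n = len(celsius)

for step in range(20_000):
    grad_w = 0.0
    grad_b = 0.0
    for x, y in zip(celsius, fahrenheit):
        error = (w * x + b) - y          # prediction minus target
        grad_w += 2 * error * x / n      # d(mean squared error)/dw
        grad_b += 2 * error / n          # d(mean squared error)/db
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b


def to_fahrenheit_v2(c: float) -> float:
    """The learned version: same behavior, but nobody typed 1.8 or 32."""
    return w * c + b


# The learned values should end up very close to 1.8 and 32.
print(round(w, 3), round(b, 3))
```

Notice what "debugging" looks like here: if the result is off, you don't edit an `if` statement. You look at the data, the learning rate, or the gradients.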

🧑‍🚀 One-Person Frameworks: The Solo Stack Renaissance

Let’s talk about something beautifully personal: the return of the one-person framework. Tools like Rails, Laravel, and even Flask once gave solo developers full creative control, and we’re now seeing a renaissance of that idea, especially in the Python world. One developer, one framework, one deployable product.

Inspired by stories like this Rails journey to €1M ARR, I’ve been thinking a lot about what this means for us in the age of AI. The core principle here is self-sufficiency: being able to take an idea from sketch to shipping, from logic to layout, without coordinating five teams. And for me, that freedom currently lives in the Pythonic joyride of FastAPI + HTMX—a stack that feels like it was written by Parseltongues 🐍.

FastAPI you probably know: it’s modern, built around Python type hints, and makes backend APIs feel like a breeze. But let me wax lyrical for a second about HTMX: it’s a tiny JavaScript library that lets you write less JavaScript. HTMX extends HTML with extra powers so that you can handle most dynamic interactions (think: search bars, live updates, form submissions) by just adding attributes to your HTML. The server responds with HTML fragments, and HTMX swaps them into the page. It’s like sprinkles of interactivity without the SPA complexity. You get server-driven UI without the overhead of full-blown frontends, and in the age of LLM-assisted code generation and server-side dominance, it just makes sense.
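Here is a rough, framework-agnostic sketch of that idea using only the standard library. The `hx-get`, `hx-trigger`, and `hx-target` attributes are real HTMX attributes, but the `/search` path, the `TOOLS` list, and the `search_fragment` function are hypothetical names made up for this example. In a FastAPI app, `search_fragment` would be the body of a GET route that returns an HTML response.

```python
from html import escape

# Hypothetical in-memory data; a real app would query a database.
TOOLS = ["FastAPI", "HTMX", "Tailwind CSS", "Ollama", "SmolML", "Flowshow"]

# The page is plain HTML. The hx-* attributes ask HTMX to send a GET request
# to /search as the user types and to swap the response into #results.
SEARCH_PAGE = """
<input type="search" name="q" placeholder="Search tools"
       hx-get="/search"
       hx-trigger="keyup changed delay:300ms"
       hx-target="#results">
<ul id="results"></ul>
"""


def search_fragment(q: str = "") -> str:
    """Return an HTML fragment (not a full page, not JSON) for HTMX to swap in."""
    needle = q.strip().lower()
    matches = [tool for tool in TOOLS if needle in tool.lower()]
    if not matches:
        return "<li>No matches</li>"
    # Escape values before putting them into HTML.
    return "".join(f"<li>{escape(tool)}</li>" for tool in matches)


print(search_fragment("fa"))
```

The point of the design is that all rendering logic stays on the server in Python. The browser only needs the HTMX script tag and a few attributes.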

But it’s not without controversy. A recent criticism on Reddit reminded me to re-examine my favorite pairing. The points raised (around debuggability, component reuse, and tooling ergonomics) are worth considering. In contrast, here’s a glowing tale of success from someone who found the sweet spot of shipping fast and keeping sane.

Final Verdict

As for me? My go-to stack remains FastAPI + HTMX + Tailwind CSS. I find that many of the concerns raised, around templating and scalability, are well addressed by leaning into FastAPI’s built-in support for Jinja2 templates. And Tailwind? It’s just enough frontend magic to keep things looking sharp without spiraling into JavaScript hell. For solo developers who want flexibility without losing control, this trio still hits the sweet spot.

So what do you think, Techies? Have you tried a solo stack? Is the Pythonic one-person framework dream alive in 2025? Let us know in Slack.

🔧 Tools to Build, Trace, and Think

No Drama, Trust in LLaMA

Have you been paying attention to Meta’s LLaMA lineup lately? It might not make as much noise as ChatGPT or Gemini, but it’s quietly becoming one of the most powerful open-source contenders out there. While OpenAI, Perplexity, and Anthropic dominate headlines, LLaMA is slipping into real workflows and side projects with surprisingly strong results. If you’re looking to inject some serious firepower into your AI experiments without getting locked into proprietary ecosystems, it’s worth a look.

If you’re new to the world of LLaMAs, you might want to check out highlights from the first-ever LlamaCon. Meta used the event to unveil exciting new tools and programs—from an interactive LLaMA API playground to security-focused tooling like LLaMA Protection Tools and the LLaMA Defenders Program. They also announced the latest LLaMA Impact Grants, awarding over $1.5 million to global projects using open models to drive real-world innovation. If you’re looking for inspiration (or a reason to get hyped), it’s a great place to start.

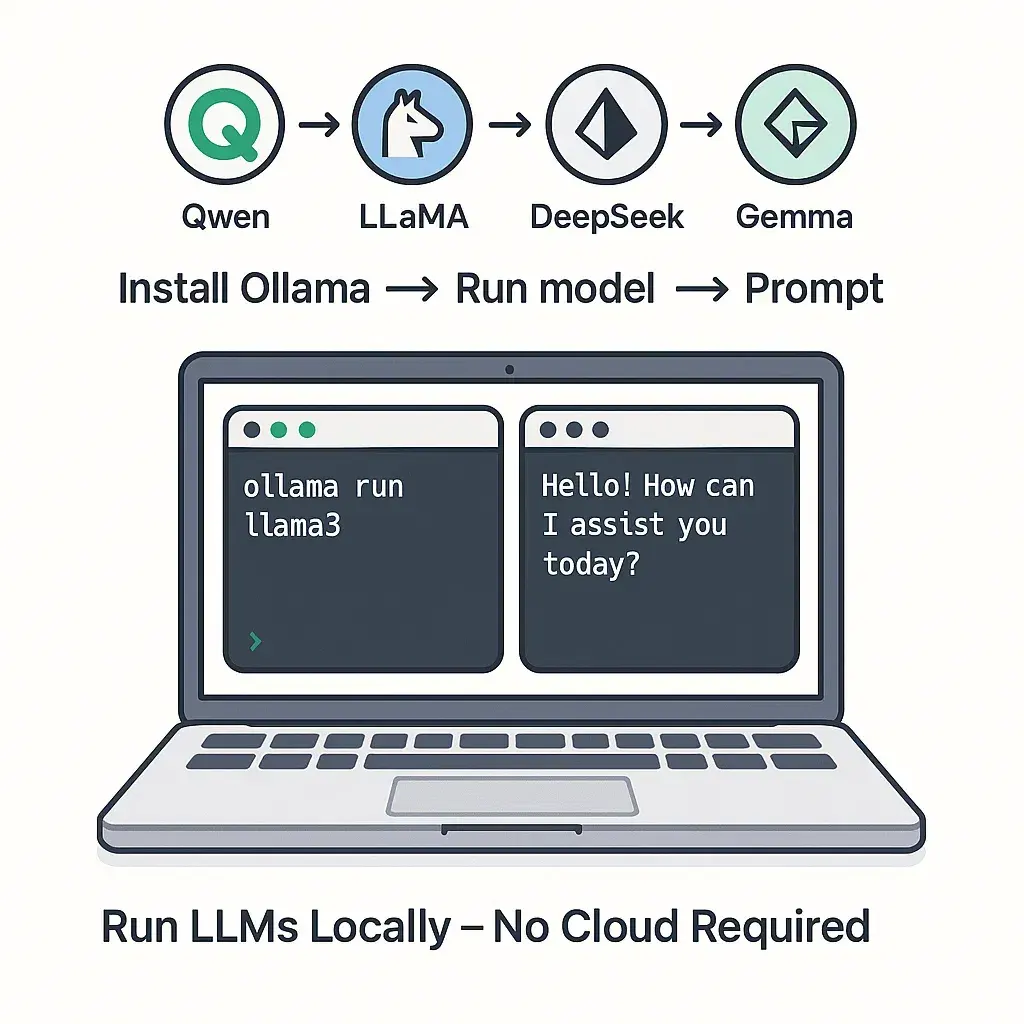

Don’t Have Cloud Credits? Try LLaMA on Your Machine

Thanks to Ollama, you can now run models like DeepSeek-R1, Qwen 3, LLaMA 3.3, and others locally on your laptop. It’s a lightweight, dev-friendly way to experiment with LLMs—without needing an API key or GPU cluster.

Skip the fluff and dive straight into the Ollama GitHub repo.

Just install, load a model, and start prompting—all in your terminal. No billing dashboard, no vendor lock-in.
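Once a model is running locally, you can also talk to it from Python. The sketch below uses only the standard library and assumes Ollama's local REST API is listening on its default address (`http://localhost:11434`) and that you have already pulled a model. The `llama3.3` tag and the helper names `build_request` and `generate` are our own choices for illustration, so swap in whatever model you actually have installed.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.3") -> urllib.request.Request:
    """Build a POST request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.3") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `print(generate("Explain backpropagation in two sentences."))` should print the model's answer. Smaller models are usually a better fit for a laptop.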

Speaking of More Tools and GitHub Repos

Here are two handpicked gems we think every Deep Learning student should check out:

SmolML is a tiny machine learning library built entirely in pure Python—no NumPy, no SciPy, no external C. It’s not about performance, but about understanding. You can walk through linear regression, backpropagation, gradient descent, and even simple neural networks, line by line. Great for learning or teaching ML fundamentals.
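To give a taste of what "backpropagation in pure Python" can look like, here is a minimal sketch of a scalar autodiff value. To be clear, this is not SmolML's API: the `Value` class and its methods are hypothetical names written just for this post, and it only supports addition and multiplication.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents      # the Values this one was computed from
        self._grad_fns = grad_fns    # one function per parent: upstream grad -> parent grad

    def __add__(self, other):
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        # d(a * b)/da = b and d(a * b)/db = a
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Order the graph so each node is processed after everything that uses it.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Chain rule: push each node's gradient back to its parents.
        for node in reversed(order):
            for parent, grad_fn in zip(node._parents, node._grad_fns):
                parent.grad += grad_fn(node.grad)


x, w, b = Value(3.0), Value(2.0), Value(1.0)
y = w * x + b
y.backward()
print(y.data, w.grad, x.grad, b.grad)
```

For `y = w * x + b`, the gradient of `y` with respect to `w` is `x`, with respect to `x` is `w`, and with respect to `b` is 1, which is what the loop above accumulates.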

Flowshow is a tiny Python library that adds a @task decorator to your functions and automatically creates execution flowcharts. It’s perfect for students working on multi-step scripts or pipelines who want to visualize what’s going on without getting bogged down in full MLOps tooling.

🧭 Outro - Final Thoughts

That’s a wrap—for now. We hope this roundup gives you a few new tools, ideas, and rabbit holes to explore. Whether you’re building, debugging, or just experimenting, remember that the right tool isn’t just about speed—it’s about clarity.

💡 Bonus Tip: Many of our favorite GitHub gems came from the Data Elixir Newsletter. If you aren’t subscribed yet, fix that—it’s a weekly goldmine for data science nerds like us.

Sources & Links

- Software 2.0 – Andrej Karpathy

- The One-Person Framework in Practice (Rails)

- FastAPI + HTMX Stack Example

- HTMX Reddit Criticism

- LLaMA Homepage

- Takeaways from LlamaCon

- LLaMA Impact Grant Recipients – Meta

- LLaMA Protections & AI Defenders Program

- Ollama – Local LLM Deployment

- Ollama GitHub Repo

- SmolML – Minimal ML Library

- Flowshow – Python Task Visualizer